
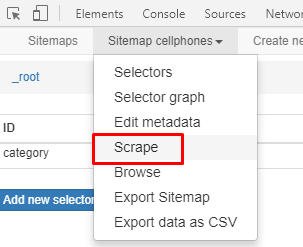

In order to scrape or extract data, you first need to know where that data is. For that reason, locating website elements is one of the key features of web scraping. Naturally, Selenium comes with that out of the box (e.g. test cases need to make sure that a specific element is present or absent on the page).

There are quite a few standard ways to find a specific element on a page. For example, you can filter for a specific HTML class or HTML ID, or use CSS selectors or XPath expressions. Particularly for XPath expressions, I'd highly recommend checking out our article on how XPath expressions can help you filter the DOM tree. If you are not yet fully familiar with XPath, it provides a very good first introduction to XPath expressions and how to use them.

As usual, the easiest way to locate an element is to open your Chrome dev tools and inspect the element that you need. A handy shortcut for this is to highlight the element with your mouse and then press Ctrl + Shift + C (Cmd + Shift + C on macOS), instead of having to right-click and choose Inspect every time. Once you have found the element in the DOM tree, you can decide on the best way to address it programmatically. For example, you can right-click the element in the inspector and copy its absolute XPath expression or CSS selector.

WebDriver provides two main methods for finding elements, `find_element` and `find_elements`. They are pretty similar, with the difference that the former looks for a single element, which it returns, whereas the latter returns a list of all found elements.

Both methods support eight different search types, indicated with the `By` class. Among them:

- `By.ID`: searches for elements based on their HTML ID
- `By.NAME`: searches for elements based on their name attribute
- `By.XPATH`: searches for elements based on an XPath expression
- `By.LINK_TEXT`: searches for anchor elements based on a match of their text content
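To make the difference between the two lookup methods concrete, here is a minimal sketch of three small helpers. It assumes Selenium 4, where `find_element` raises `NoSuchElementException` when nothing matches while `find_elements` simply returns an empty list. The helper names `require`, `find_optional` and `is_present` are hypothetical, not part of Selenium; each accepts any WebDriver instance plus a `(By.…, value)` locator tuple.

```python
from typing import Any, Optional, Tuple

# A locator is a (strategy, value) pair such as (By.ID, "search") or
# (By.XPATH, "//a[@class='next']"); Selenium's By constants are plain strings.
Locator = Tuple[str, str]


def require(driver: Any, locator: Locator) -> Any:
    """Return the first matching element; Selenium raises if none exists."""
    return driver.find_element(*locator)


def find_optional(driver: Any, locator: Locator) -> Optional[Any]:
    """Return the first matching element or None, avoiding the exception."""
    matches = driver.find_elements(*locator)  # [] when nothing matches
    return matches[0] if matches else None


def is_present(driver: Any, locator: Locator) -> bool:
    """Presence/absence check, as typically used in test cases."""
    return len(driver.find_elements(*locator)) > 0
```

In a real session you would import `By` from `selenium.webdriver.common.by` and call, for example, `is_present(driver, (By.ID, "search"))`, where the ID `search` stands in for whatever you copied from the inspector. Using `find_elements` for optional elements keeps control flow free of try/except blocks; `find_element` is the better fit when a missing element really is an error.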


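To install the Selenium package, as always, I recommend that you create a virtual environment (for example using virtualenv) and then run `pip install selenium`. The core of the script imports the Chrome options and starts the driver:

```python
from selenium import webdriver
from selenium.webdriver.chrome.options import Options  # options = Options() configures the browser
from selenium.webdriver.chrome.service import Service
driver = webdriver.Chrome(service=Service(DRIVER_PATH), options=options)  # DRIVER_PATH: path to chromedriver
```

Since Selenium 4.10, `webdriver.Chrome` no longer accepts `executable_path`; the driver path is passed through a `Service` object instead. When you run that script, you'll get a couple of browser-related debug messages and eventually the HTML code of the loaded page.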
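For reference, here is a minimal end-to-end sketch of such a script, assuming Selenium 4.10 or later and a local Chrome installation. The function names `chrome_arguments` and `fetch_page_source` are hypothetical, and the 1920x1200 window size is only a placeholder. It starts Chrome in headless mode, loads a URL, and returns `driver.page_source`, quitting the browser in a `finally` block.

```python
from typing import List, Optional


def chrome_arguments(width: int = 1920, height: int = 1200, headless: bool = True) -> List[str]:
    """Chrome command-line switches; "--headless=new" selects Chrome's newer headless mode."""
    args = [f"--window-size={width},{height}"]
    if headless:
        args.append("--headless=new")
    return args


def fetch_page_source(url: str, driver_path: Optional[str] = None) -> str:
    # Imported here so chrome_arguments() stays usable without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.chrome.service import Service

    options = Options()
    for arg in chrome_arguments():
        options.add_argument(arg)
    # With driver_path=None, Selenium tries to locate a matching chromedriver itself.
    service = Service(executable_path=driver_path)
    driver = webdriver.Chrome(service=service, options=options)
    try:
        driver.get(url)
        return driver.page_source  # HTML as rendered by the browser
    finally:
        driver.quit()  # always close the browser, even if get() fails
```

With Chrome installed, `print(fetch_page_source("https://example.com/"))` would print the rendered HTML of that (placeholder) URL after the usual debug output.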

In the last tutorial we learned how to leverage the Scrapy framework to solve common web scraping tasks. Today we are going to take a look at Selenium (with Python ❤️) in a step-by-step tutorial.

Selenium refers to a number of different open-source projects used for browser automation. It supports bindings for all major programming languages, including our favorite language: Python. The Selenium API uses the WebDriver protocol to control web browsers like Chrome, Firefox, or Safari. Selenium can control both a locally installed browser instance and one running on a remote machine over the network.

Originally (and that was about 20 years ago!), Selenium was intended for cross-browser, end-to-end testing (acceptance tests). In the meantime, however, it has been adopted mostly as a general browser automation platform (e.g. for taking screenshots), which, of course, also includes web crawling and web scraping. Rarely is anything better at "talking" to a website than a real, proper browser, right?

Selenium provides a wide range of ways to interact with sites, such as:

- Executing your own, custom JavaScript code

But the strongest argument in its favor is the ability to handle sites in a natural way, just as any browser will. This particularly shines with JavaScript-heavy Single-Page Application sites.
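Executing custom JavaScript goes through `driver.execute_script`. Here is a minimal sketch, assuming an already started WebDriver session; the helper names are hypothetical. Selenium exposes any extra arguments to the script as `arguments[0]`, `arguments[1]`, and so on, and converts a JavaScript `return` value into a Python object.

```python
from typing import Any


def page_title(driver: Any) -> str:
    """Read a value from the page by returning it from JavaScript."""
    return driver.execute_script("return document.title;")


def scroll_to_bottom(driver: Any) -> Any:
    """Scroll the window to the end of the document and return the new offset."""
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
    return driver.execute_script("return window.pageYOffset;")


def click_via_js(driver: Any, element: Any) -> None:
    """Click an element (e.g. a button) from inside the page; it arrives as arguments[0]."""
    driver.execute_script("arguments[0].click();", element)
```

A JavaScript click can help when another element overlays the button, but unlike the native `element.click()` it does not simulate a real user click, so prefer the native call whenever it works.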
